Exploring New Frontiers in Compute and Data Methods

June 07, 2021 | Susan Tussy

Addressing new methods and techniques for scale and performance in today’s world of data-driven discovery

Mountains of data are being generated faster than ever before, and there is an ever-growing need to manage this exponential growth. IDC projected that 59 zettabytes would be created and consumed worldwide in 2020, and 175 zettabytes by 2025, compared with just 18 zettabytes in 2018. To manage these massive volumes of data, it is paramount to address not only raw compute requirements but also the methods and techniques used to rapidly, reliably, and securely discover, access, transfer, store, and reuse the data.

Globus Labs is a research group that spans the Department of Computer Science at the University of Chicago and the Data Science and Learning Division at the U.S. Department of Energy’s (DOE) Argonne National Laboratory. Globus, together with its users, helps the research group identify new challenges faced by the scientific community, while researchers at Globus Labs work to create novel solutions. Researchers at Globus Labs frequently prototype new services, and if a service shows particular promise, it may be transitioned to Globus for productization. The Globus product team then builds out the service to meet the needs of a broader audience and adds the features and functions needed to operate and support the service at scale.

“The continuing close collaboration between Globus Labs and Globus is tremendously exciting,” states Ian Foster, director of the Data Science and Learning Division at Argonne, and Arthur Holly Compton Distinguished Service Professor, Department of Computer Science at the University of Chicago. “Together we work to accelerate the work of scientists who are working on the world’s most pressing issues, from climate change to global pandemics and energy security. These applications are also rich sources of problems for computer science students, and so it’s a real win-win situation. By combining the computing power that is available in the exascale era with new techniques and methodologies, we can enable new scientific breakthroughs that would not otherwise be achievable.”

Some Current Research Projects

funcX is one project for which Globus Labs received funding from the National Science Foundation in 2020. funcX builds on the Globus platform and on Parsl, a Python library for developing and executing parallel programs that was created at the University of Chicago, Argonne National Laboratory, and the University of Illinois. funcX enables researchers to dispatch each computation to the system where it makes the most sense to run, rather than running an entire program on a single system. With the diverse range of computing environments available today, researchers can use funcX to distribute their workloads across different systems.

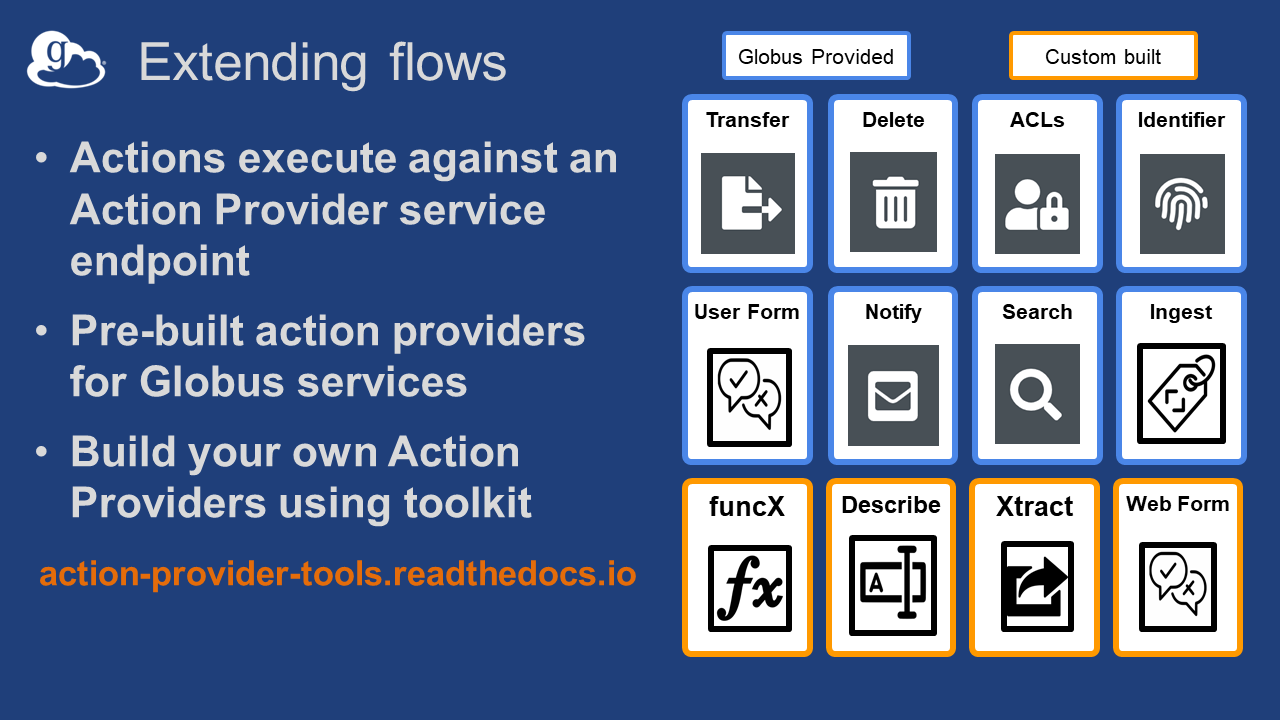
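The idea of sending each computation to the most suitable system can be illustrated with a small, self-contained sketch. This is not the funcX SDK: the `Endpoint` class, the `choose_endpoint` helper, and the endpoint names are hypothetical, and local thread pools stand in for remote systems so the example runs anywhere.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class Endpoint:
    """Hypothetical stand-in for a remote compute resource (not a funcX class)."""
    name: str
    has_gpu: bool = False
    # A local thread pool plays the role of the remote system in this sketch.
    pool: ThreadPoolExecutor = field(
        default_factory=lambda: ThreadPoolExecutor(max_workers=2)
    )

    def submit(self, fn, *args) -> Future:
        """Run fn(*args) on this endpoint and return a future for the result."""
        return self.pool.submit(fn, *args)


def choose_endpoint(endpoints, needs_gpu):
    """Pick the first endpoint whose capabilities match the task's requirement."""
    for ep in endpoints:
        if ep.has_gpu == needs_gpu:
            return ep
    raise LookupError("no suitable endpoint")


def count_words(text):
    return len(text.split())


def mean(values):
    return sum(values) / len(values)


# Two assumed systems: a small local machine and a GPU-equipped cluster.
endpoints = [Endpoint("laptop"), Endpoint("cluster", has_gpu=True)]

# Each task: (function, arguments, whether we pretend it needs a GPU).
tasks = [
    (count_words, ("data driven discovery",), False),
    (mean, ([2.0, 4.0, 6.0],), True),
]

results = {}
for fn, args, needs_gpu in tasks:
    ep = choose_endpoint(endpoints, needs_gpu)
    future = ep.submit(fn, *args)
    results[fn.__name__] = (ep.name, future.result())

for ep in endpoints:
    ep.pool.shutdown()

print(results)
```

The point of the sketch is the separation between the function being run and the place it runs: the caller describes what a task needs, and a routing step decides which system receives it.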

Globus Flows is another Globus Labs project. Data management issues become particularly evident in large-scale experimental science projects, where researchers are typically allocated short periods of time on expensive, high-demand instruments. Automation can reduce inefficiencies and enable more science. This project is creating a data-driven platform that can automate data management best practices for replication, publication, indexing, analysis, and inference. Most tasks for processing newly generated instrument data can be automated, with human intervention available as needed, for example to review initial image quality and adjust an instrument before running a new experiment. The Globus automation platform services can be applied to any experimental, storage, and compute infrastructure, and administrators can extend them by defining automated task sequences, or “flows,” that link action providers to respond to new events.

While funcX is currently deployed only in a few controlled environments, Globus Flows is available for use by any Globus subscriber, and is already being used at scale in production environments at multiple institutions, including DOE Office of Science User facilities at Argonne National Laboratory – the Advanced Photon Source (APS) and the Argonne Leadership Computing Facility (ALCF). Argonne researchers have leveraged both funcX and Flows to develop a flow that links beamlines at the APS with supercomputers at ALCF, enabling rapid analysis of the crystal structure of COVID-19 proteins. This flow uses Globus to transfer images from APS to ALCF’s Theta supercomputer, where analysis and processing are performed via funcX. Computations executed via funcX then extract metadata about hits, identify crystal diffractions, and generate visualizations depicting both the sample and hit locations. The raw data, metadata and related visualizations are then published to a portal hosted at the ALCF, where they are indexed and made searchable for reuse.
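The shape of such a flow (transfer, then analysis, then publication) can be sketched in a few lines of plain Python. This is an illustration only and does not use the Globus Flows definition format or API: the step functions, the `run_flow` helper, the sample name, and the toy "score" attached to each image are all assumptions made for the example.

```python
def transfer(state):
    """Stand-in for moving images from the instrument to the compute site."""
    return {"staged": list(state["images"])}


def analyze(state):
    """Stand-in for analysis: treat any image with a positive score as a hit."""
    hits = [name for name, score in state["staged"] if score > 0]
    return {"hits": hits}


def publish(state):
    """Stand-in for publication: build a record that a portal could index."""
    record = {
        "sample": state["sample"],
        "n_images": len(state["staged"]),
        "hits": state["hits"],
    }
    return {"record": record}


def run_flow(steps, initial_state):
    """Run each step in order, merging its output into the shared state."""
    state = dict(initial_state)
    for step in steps:
        state.update(step(state))
    return state


# A flow is an ordered list of steps; each step sees the results of earlier ones.
flow = [transfer, analyze, publish]

final = run_flow(
    flow,
    {
        "sample": "sample-01",  # hypothetical sample name
        "images": [("img_001", 0), ("img_002", 3), ("img_003", 1)],
    },
)

print(final["record"])
```

Because every step reads from and writes to a shared state, steps can be reordered, replaced, or extended (for instance with a human review step) without changing the others, which is the property that makes this kind of pipeline straightforward to automate.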

In a recent experimental campaign, 19 samples were analyzed across nearly 1,500 flows over the course of three 10-hour runs on the APS beamline, during which more than 700,000 images were processed on Theta. The resultant data were published to the data portal and used to guide subsequent experimental work and configurations. Without Flows and funcX, this computer-in-the-loop experiment would not have been possible.

Learn more about Globus Flows

Original article on how Argonne researchers use Theta for real-time analysis of COVID-19 proteins can be found here
